Research Overview

For a comprehensive synthesis of my work and future research vision, please see my formal statement below.

Download Research Statement

Local EGOP Learning

Kokot et al. (2026) · Submitted to ICML

A geometric model for structured data via the supervised noisy manifold hypothesis. We develop a method to capture the adaptivity deep networks achieve when the target function exhibits local low-dimensionality.

Coreset Selection

Kokot & Luedtke (2025) · Submitted to JMLR

A framework for selecting coresets with respect to arbitrary losses, including the Sinkhorn divergence.

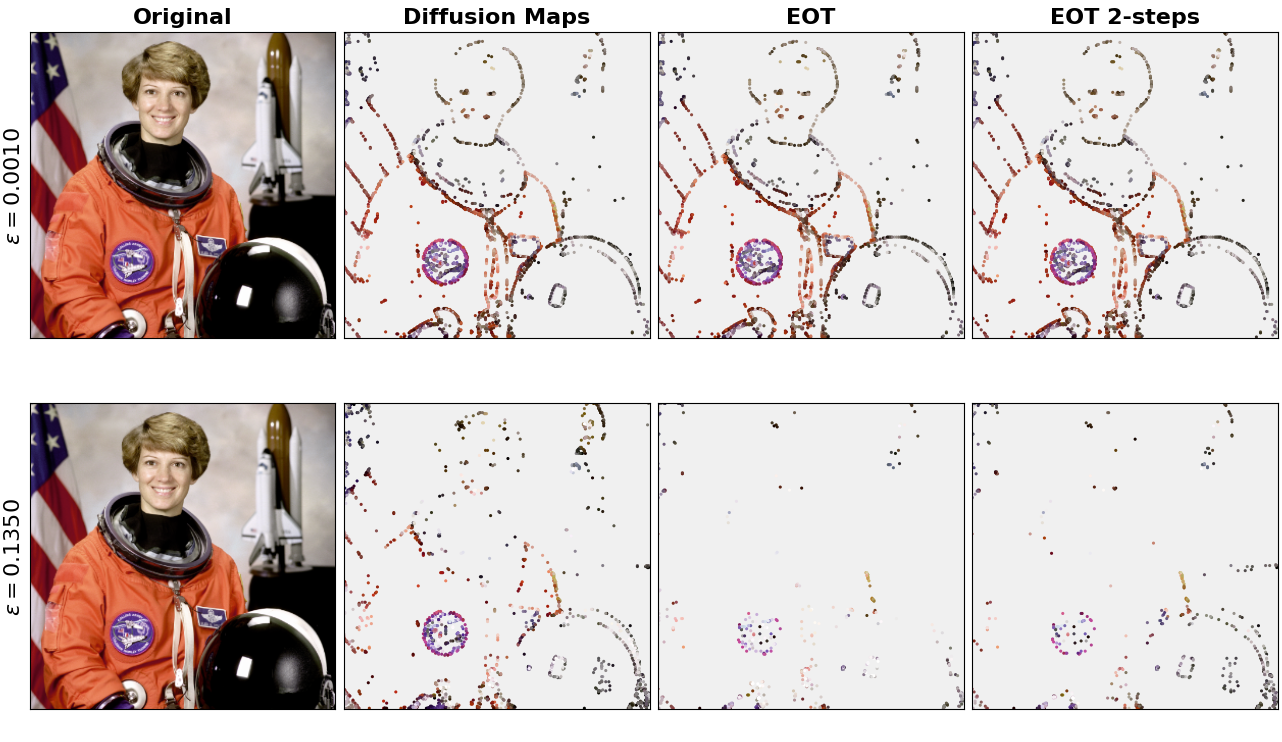

Entropic Optimal Transport

Kokot · To be submitted to SIMODS

Refining the analysis of the Sinkhorn divergence via Hadamard differentiability and deriving limits for self-EOT, establishing connections to classical estimators in density and score estimation.

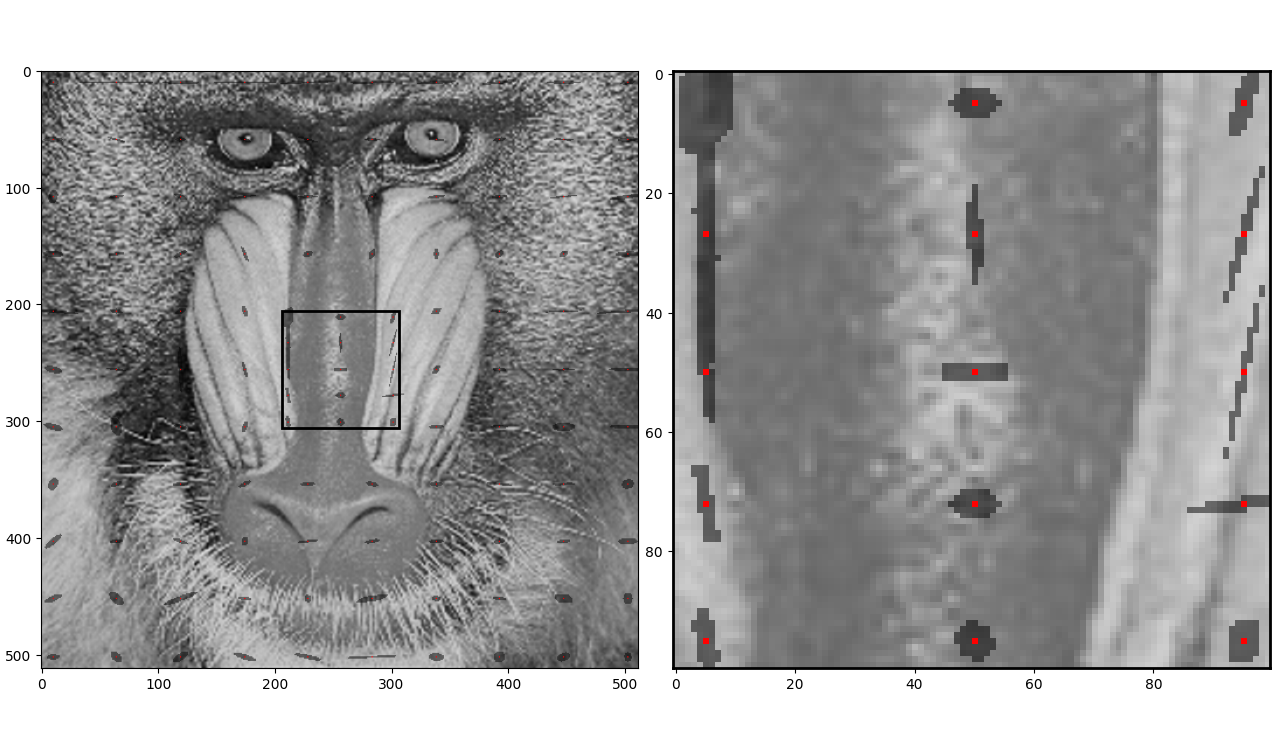

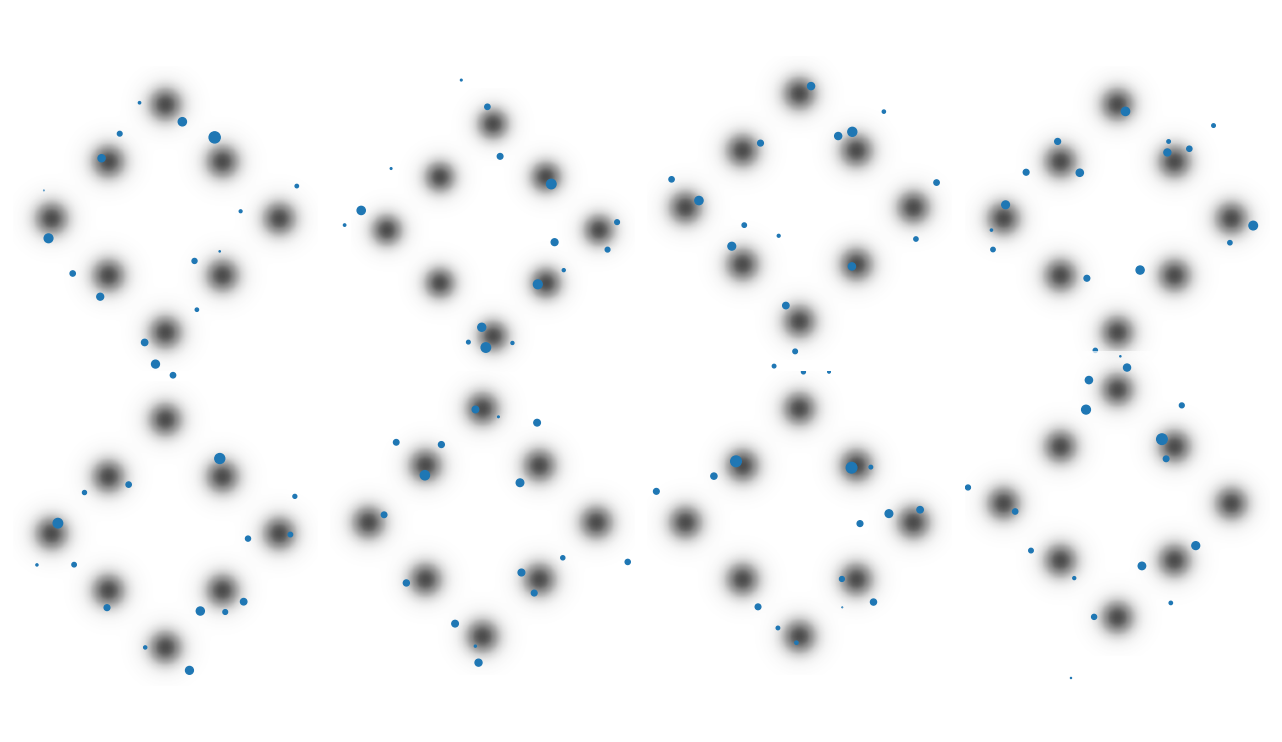

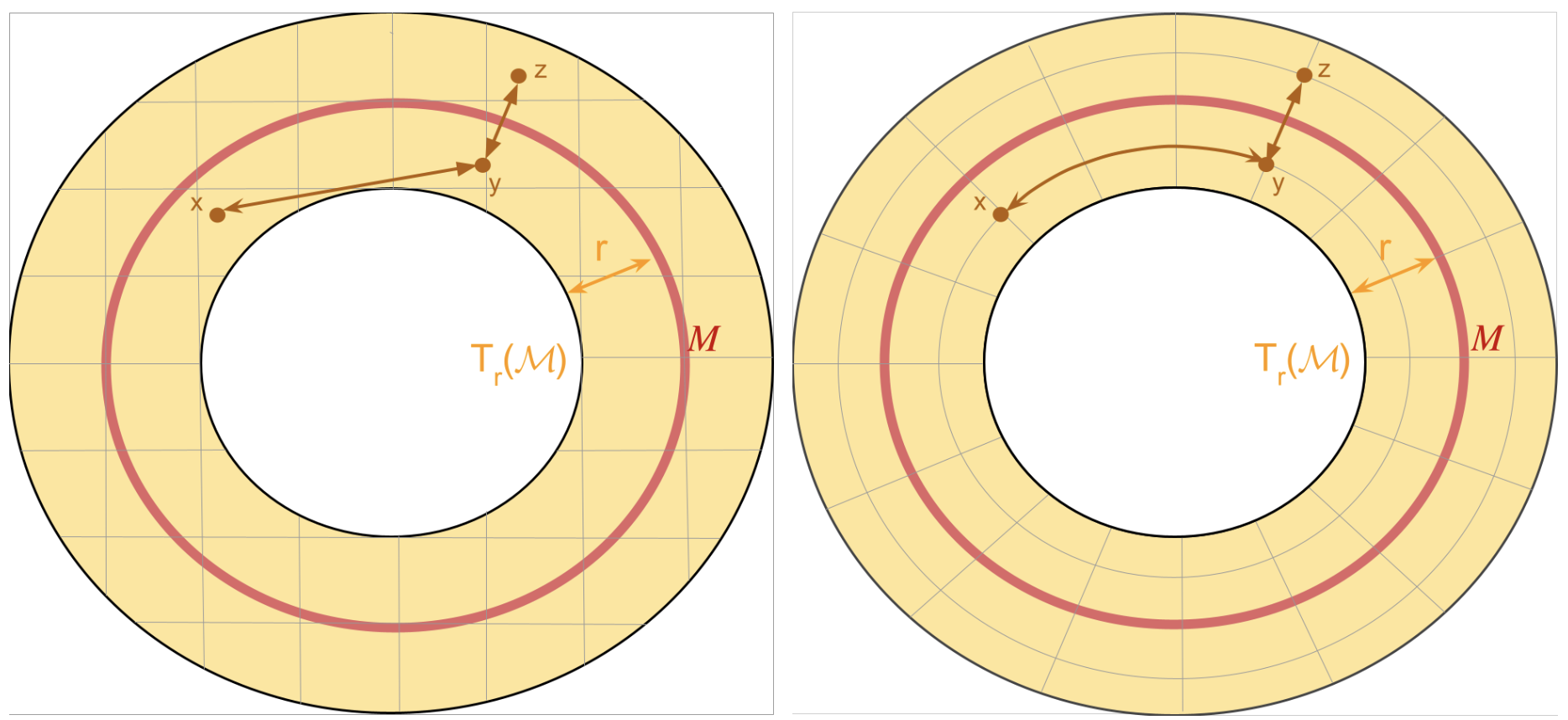

Geometrically Structured Data

Kokot, Murad, & Meila (2025) · ICML 2025

Rigorous analysis of spectral embeddings on noisy manifolds. Using the Sasaki metric, we show these embeddings detect structure beyond strict dimensionality.

Full Publication List

In Preparation

- Alex Kokot, Alex Luedtke, Marina Meila (2026). Diffusion Maps are Entropic Self-Transport: Limits and Applications as ε→0. To be submitted to SIMODS.

Submitted

- Vydhourie Thiyageswaran, Alex Kokot, et al. (2026). Optimal Design under Interference, Homophily, and Robustness Trade-offs. Submitted to JASA.

- Alex Kokot, et al. (2026). Local EGOP for Continuous Index Learning. arXiv:2601.07061. Submitted to ICML.

- Alex Kokot, Alex Luedtke (2025). Coreset selection for the Sinkhorn divergence and generic smooth divergences. arXiv:2504.20194. Submitted to JMLR (under review).

Peer-Reviewed

- Alex Kokot, Octavian-Vlad Murad, Marina Meila (2025). The Noisy Laplacian: A threshold phenomenon for non-linear dimension reduction. In Proc. 42nd Int’l Conf. on Machine Learning (ICML 2025).

- Jose Agudelo, Brooke Dippold, Ian Klein, Alex Kokot, Eric Geiger, Irina Kogan (2024). Euclidean and affine curve reconstruction. Involve, a Journal of Mathematics.

- Lei Zhang, Yu Wang, Mengyu Xu, Alex M. Kokot, Jie Qiu, Peter C. Burns (2024). Hydrothermal synthesis and structure of organically templated layered neptunyl(VI) phosphate (NpO2)3(PO4)2(Terpy). CrystEngComm.

- Hrafn Traustason, Nicola L. Bell, Kiana Caranto, David C. Auld, David T. Lockey, Alex M. Kokot, Jennifer E. S. Szymanowski, Leroy Cronin, Peter C. Burns (2020). Reactivity, Formation, and Solubility of Polyoxometalates Probed by Calorimetry. Journal of the American Chemical Society.

- Lei Zhang, Sergey M. Aksenov, Alex Kokot, Samuel N. Perry, Travis A. Olds, Peter C. Burns (2020). Crystal Chemistry and Structural Complexity of Uranium(IV) Sulfates. Inorganic Chemistry.

- Kulick, J., Nichols, B., Knight, T., Lu, T., Ortega, C., Siders, S., Kokot, A., Bernstein, G. (2019). Enabling curved hemispherical arrays with Quilt Packaging interconnect technology. In Proc. SPIE 10980.

- Jie Qiu, Tyler L. Spano, Mateusz Dembowski, Alex Kokot, Jennifer E. S. Szymanowski, Peter C. Burns (2017). Sulfate-Centered Sodium-Icosahedron-Templated Uranyl Peroxide Phosphate Cages. Inorganic Chemistry.